Top of the Stack

Self Centralizing?

2022-06-24 - Home Server - Remote Access

I have been pretty busy the last couple of days getting my home server configured. I'm converting my old workstation into a server because I recently bought a pretty beefy laptop. I've found myself needing more mobile computing power for projects and recreation [as much as I love my ThinkPad X220, it doesn't cut it when I'm trying to get some games in with the boys].

This week I did quite a bit of research on software that would let me be more self-sufficient [in a digital sense; it won't be any help if the grid goes down]. I was able to install some of it [with some troubleshooting] but have yet to test it enough to conclude whether it's overkill for my purposes. A summary of what I've done:

- Install a hypervisor - Proxmox

- Purchase and reconfigure storage for redundancy and increased capacity

- Install and configure a remote work environment

- Install a NAS OS to trial - OpenMediaVault

I'll do a quick rundown of all these points.

Hypervisor

A hypervisor, from my understanding, is the software that hosts and manages guest OSs [guest OS is the fancy way of saying virtual machine/container/etc]. There are Type 1 and Type 2 hypervisors. Type 2 hypervisors, like VirtualBox, run on top of another OS. For example, say you have Windows installed and would like to try out Linux. You can install VirtualBox ON Windows and then install Linux within VirtualBox. The point is that VirtualBox has to go through Windows to interact with the bare metal. A Type 1 hypervisor IS the OS, running without any other software between it and the bare metal, which is why Type 1 hypervisors are sometimes called bare metal hypervisors. The benefit of a Type 1 hypervisor is less overhead, since there is no full host OS to support and the hypervisor itself is typically extremely lightweight.

I settled on Proxmox, which is a bare metal hypervisor, so I can stage and deploy a good number of containers and VMs without being throttled by a host OS. Additionally, Proxmox is an open-source project, which is always a plus [pretty close to a must in my book].

Storage

My old tower had two 512 GB SSDs holding the main partitions of my Linux install [my daily driver during the pandemic] and my Windows install [which was basically just for games]. It also had a 1 TB HDD that I used for storage on the Linux install.

Because I want to set up a self-hosted cloud storage solution and/or a media server, I wanted not only to increase capacity but also to add some redundancy in case of a drive failure. So I went out and got myself two more 4 TB HDDs. I actually 3D printed two hard drive caddies for my case from a design I found on Thingiverse. I also had two 2.5 inch drive caddies that were meant for a different case, so I just secured them wherever they would fit with some zip ties.

So storage currently consists of:

- Two 512 GB SSDs - one will be used as the boot partition for my hypervisor and the other as a cache, scratch, or boot partition for the guest OSs

- Two 4 TB HDDs - configured as a single 4 TB mirrored volume for data storage [basically this means the data is written twice, once to each drive, so that the failure of one drive won't lead to any data loss]

- One 1 TB HDD - just for slow, low-priority data [no redundancy, no speed, kinda the odd one out]
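To make the "written twice" idea concrete, here's a toy Python model of a two-way mirror. It's purely illustrative and not how ZFS works internally [real ZFS also checksums every block, repairs a bad copy from the good one, and resilvers a replacement drive]; the class name ToyMirror and the file name are made up for the example.

```python
class ToyMirror:
    """Toy two-way mirror: every write is copied to each healthy disk.

    Only illustrates the idea of mirroring; real ZFS also checksums
    blocks, self-heals bad copies, and resilvers replacement drives.
    """

    def __init__(self, n_disks=2):
        self.disks = [{} for _ in range(n_disks)]  # each dict stands in for one drive
        self.failed = set()

    def write(self, key, data):
        # Write the same data to every disk that is still alive.
        for i, disk in enumerate(self.disks):
            if i not in self.failed:
                disk[key] = data

    def fail(self, i):
        # Simulate a dead drive: everything on it is gone.
        self.failed.add(i)
        self.disks[i].clear()

    def read(self, key):
        # Any surviving copy is good enough.
        for i, disk in enumerate(self.disks):
            if i not in self.failed and key in disk:
                return disk[key]
        raise KeyError(f"{key!r} lost: no healthy disk has a copy")


pool = ToyMirror()
pool.write("photo.jpg", b"...")
pool.fail(0)                    # one 4 TB drive dies
print(pool.read("photo.jpg"))   # still readable from the other drive
```

Losing both drives is the case a mirror can't save you from, which is why it's redundancy and not a backup.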

I implemented the redundancy above using ZFS. ZFS is a filesystem that collects disks into storage pools, which can then be divided cleanly into distinct sets of data [called datasets]. I keep returning to this video by Linda Kateley, which explains the system extremely clearly.

Here are the commands I used to create the configuration above.

zpool create fastpool /dev/sda

zpool create safepool mirror /dev/sdb /dev/sdc

zpool create badpool /dev/sdd

I created three pools: fastpool, which is the SSD that isn't my Proxmox boot drive; safepool, which is the mirrored 4 TB pool; and badpool, which is the one that is neither fast nor redundant.
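A quick back-of-the-envelope sketch of how much usable space each pool ends up with. It assumes the simple rules that a mirror is limited by its smallest disk and a non-redundant pool just adds up its disks, and it ignores ZFS metadata and reserved space. `zpool list` reports sizes in binary units, so expect it to show somewhat smaller numbers than the drive labels. The usable_tb helper and the "single" layout label are my own naming.

```python
def usable_tb(layout, sizes_tb):
    """Rough usable capacity of a pool in TB, ignoring ZFS metadata and reserved space."""
    if layout == "mirror":
        return min(sizes_tb)  # every disk holds a full copy, so the smallest disk limits the pool
    if layout == "single":
        return sum(sizes_tb)  # no redundancy: a single disk (or plain stripe) just adds up
    raise ValueError(f"unknown layout: {layout}")


# Sizes from the build above; layouts match the zpool create commands.
pools = {
    "fastpool": ("single", [0.512]),
    "safepool": ("mirror", [4, 4]),
    "badpool": ("single", [1]),
}

for name, (layout, sizes) in pools.items():
    print(f"{name}: ~{usable_tb(layout, sizes)} TB usable ({layout})")
```

So the mirror costs half the raw capacity of the two 4 TB drives, which is the price of surviving a single drive failure.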

Installed Operating Systems

I fired up two guest operating systems to get myself started. One is an Arch Linux install that copies the dotfiles from my old workstation. This just means that my configuration [window manager, terminal emulator, keybindings, etc.] is carried over from my old daily driver. The other is an instance of OpenMediaVault where I'll be storing my data [media server data?].

For the workstation install I downloaded the Arch Linux ISO and uploaded it to homeserv through the Proxmox web GUI [which is reached on port 8006 by default]. I chose to make this a container because containers are a little more lightweight and I don't plan on doing any intense computing on it. I'll have to dig deeper into the differences between a VM and a CT [container] in the future.

I'll give the details on the OpenMediaVault installation in a later post because there are some bugs in the installer that required some interesting workarounds [and this post is 300000 lines long and a day late sooooo....].

I'll try to write up some guides this weekend to document the entire process while it's still fresh in my mind.

Cloudflare Died!

2022-06-21 - Web Development - Administration

What unfortunate timing! I was just about to write up this post when I lost access to my VPS because Cloudflare went down. Here is the Cloudflare postmortem where they discuss what happened.

It looks like they were trying to "[roll] out a change to standardize [their] BGP" and, from my understanding [which I would take with about a cup of salt], moved the reject condition above the "site-local terms". So requests would be rejected before being able to reach origin servers [as opposed to an edge or caching server].

I might look more into BGP because I don't know about it at all. One for the stack I suppose.
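Here's a toy Python model of why reordering policy terms matters, assuming the common "first matching term wins" evaluation. The term names, the prefix, and the accept/reject actions are hypothetical and this is not Cloudflare's actual configuration; it only shows that once a catch-all reject sits above a more specific term, the specific term never gets a chance to match.

```python
from ipaddress import ip_network

# Hypothetical prefixes that a site-local term is supposed to accept.
SITE_LOCAL_PREFIXES = {ip_network("203.0.113.0/24")}


def is_site_local(prefix):
    return prefix in SITE_LOCAL_PREFIXES


def matches_anything(prefix):
    return True


def evaluate(policy, prefix):
    """Return the action of the first term whose condition matches (first match wins)."""
    for _name, condition, action in policy:
        if condition(prefix):
            return action
    return "reject"  # assumed implicit default when no term matches


# Same two terms, different order.
before = [
    ("SITE-LOCAL", is_site_local, "accept"),
    ("REJECT-THE-REST", matches_anything, "reject"),
]
after = [
    ("REJECT-THE-REST", matches_anything, "reject"),
    ("SITE-LOCAL", is_site_local, "accept"),
]

prefix = ip_network("203.0.113.0/24")
print(evaluate(before, prefix))  # accept
print(evaluate(after, prefix))   # reject: the catch-all fires first
```

Neither term changed, only their order, which is what makes this kind of mistake so easy to miss in review.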

Finally Creating a HomeLab

2022-06-16 - Home Lab - Administration

I am currently setting up my home lab, using my old computer as my new server. The plan is to build an environment where I can mess with all things networking and delve into self-hosting [the more in my control, the less I can blame anyone else when everything explodes].

In addition to configuring and researching the new server [or old server depending on how you look at it], I've got some other projects brewing at the moment. I've been experimenting with creating a remote computing workspace that I can access from outside my network. I am also resurrecting my 3D printer, which has been idle for about a year. I'll go over my setup and the modifications I've made to my Ender 3 Pro.

Also here is something that I made today.

I don't want to give too much away, but long story short, I had a welding class today where I made this little crab. I'd never welded before today and it was pretty cool. Definitely a lot more to explore and learn on that front.

Hope that holds you over till Monday!

Picky Pasta Person makes Pesto Ravioli

2022-06-09 - Cooking - Pasta

Ingredients and Equipment Needed

Dough

- Rolling pin or pasta roller [something to smoosh the dough into a sheet]

- 2 cups of all purpose flour

- 1/2 teaspoon kosher salt

- 3 eggs

- 2 egg yolks

Pesto Sauce

- Blender/Food processor [maybe a SUPER fast chopper man with a knife]

- 1 cup basil

- 3 cloves of garlic

- 1/3 cup grated Parmesan

- 1/2 teaspoon kosher salt

- 1/3 cup of olive oil

Ravioli Filling

- 12 ounces of ricotta

- 1/2 cup shredded mozzarella

Recipe

Make the dough first because it needs to sit for 30 minutes

- Mix flour and salt together

- Beat eggs and egg yolks and add to the flour

- Mix until fully incorporated, then knead for about 10 minutes - it should be firm but not too grainy

- Wrap in plastic wrap and let sit for 30 minutes

While the dough sits, start on the pesto sauce needed for the filling

- Combine all the pesto ingredients in the blender/food processor and let 'er rip [I used a blender; just make sure it doesn't purée the mixture]

- That's it! Give it a taste and add some extra salt if it needs it

Making the Filling

- Mix your pesto, the ricotta, and the mozzarella together

- That's it! Cooking is easy-peasy

Back to the dough and putting everything together

- Use the pasta roller or rolling pin to flatten the dough into a sheet [add some flour to your rolling device so the dough doesn't get stuck or sticky]

- Cut out ravioli sized squares [whatever your heart says that size is]

- Put a dollop of your filling in the middle of one of your squares

- Very lightly wet the edges of the square with the dollop and of another square [makes the edges sticky again]

- Lay the undolloped square on top of the dolloped square and gently pinch the edges together

- Then use a fork to smoosh the edges more and give them a classic ravioli look

- Bring a pot of water to a boil and cook a few of the ravioli at a time for about 4 minutes [they can stick together if they touch while cooking which isn't too big of a deal but crowding is generally a bad idea from my xp]

- Then plate and add a tiny bit of butter and Parmesan.

- Eat the food

Log

I really dislike dried pasta. I feel like a lot of recipes say to cook pasta al dente but, if dried, you get a hard, chalky, stale center. I also think that making your own pasta dough is typically fast, easy, and tastes infinitely better.

Given this is my first Cooking post [my first anything other than Web Development post actually], I'll preface it with my experience with cooking [or the lack thereof]. In all honesty, I only recently started cooking regularly. In the past I would get inspired to cook something but get discouraged when it didn't turn out as well as I wanted [even if the liars in my life said it was good], and go back to grabbing Taco Bell after work. Recently I decided that cooking should be approached like any other skill - starting with the basics and following guides until you gain the knowledge and confidence to experiment [and filled with feeling like you are terrible regardless of how much you progress]. Because I'm still very much a beginner, I'll be following a lot of online recipes until I gain the confidence to refactor dishes for myself.

With that out of the way, I'll continue onto one of the meals I made this week.

I found a recipe for Easy Homemade Pesto Ravioli, but it used store-bought pesto sauce and the link to the ravioli dough 404'd. Since I knew I wanted to make my own dough [given my rant at the start], I thought why not keep everything homemade, including the pesto sauce.

So I found separate recipes for the ravioli dough and the pesto sauce and put all three together.

It turned out pretty tasty and I especially like having the light butter as the sauce topping as I always felt the whole point of ravioli was to have the flavor come from the stuffing and not the sauce on top.

I now realize how stupid it was not to take pictures of the process so you could judge whether it looks good, but uhhh... yeah. I didn't take any pictures, so I guess you'll just have to trust me that it was good.

Online!

2022-06-06 - Web Development - Administration

Hopefully you can see this because that means that I didn't mess anything up when updating the site! I'll quickly recap what I did over the last week and clarify what's in the pipeline for this upcoming week.

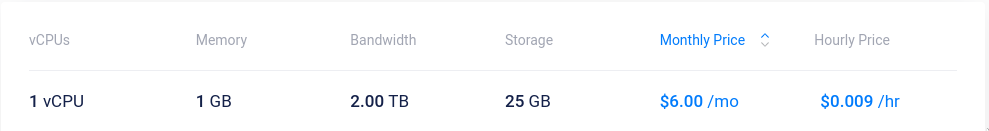

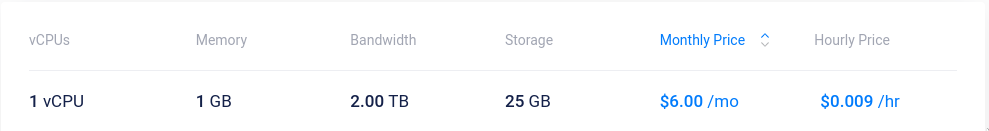

So I ended up destroying and remaking the Vultr VPS [Virtual Private Server, if I didn't clarify that previously] so that I'd have a fresh, clean environment to start messing around in. I decided to go with their smallest and cheapest plan to start things off [I think it can handle the traffic I expect to start "pouring" in].

Vultr Cloud Pricing


I found this video that explained how to calculate how much bandwidth I needed to budget for. Again, I don't think I need much [something tells me I'm the only one checking in on this website 100 times a day]. I stuck with a Debian distribution for no reason in particular other than it's what I've used on other VPSs [if you're unfamiliar with Linux and Linux distributions, I'll make some intro-to-Linux guides in the future that'll explain all the common nerd jargon].
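The video's exact method isn't reproduced here; this is a common rule of thumb instead, sketched in Python: page weight times daily page views times days in a month, padded with a safety factor. The function name, the 1.5x headroom, and the example numbers are assumptions, not measurements from this site.

```python
def monthly_bandwidth_gb(page_size_mb, views_per_day, days=30, headroom=1.5):
    """Estimate monthly outbound transfer in GB.

    page_size_mb: total weight of one page load (HTML, CSS, images), in MB
    views_per_day: expected page loads per day
    headroom: assumed safety factor for crawlers, spikes, and repeat visits
    """
    return page_size_mb * views_per_day * days * headroom / 1000  # 1 GB = 1000 MB


# Hypothetical numbers: a 0.5 MB page loaded 100 times a day.
print(f"{monthly_bandwidth_gb(0.5, 100):.2f} GB/month")  # → 2.25 GB/month
```

For a mostly text site like this one, images are what would move the number the most.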

I grabbed the domain name from dynadot and changed the DNS records to point it at the IP of the VPS. I'll write up a full guide for this in a bit [honestly it's pretty easy]. I then installed nginx, changed the config files to point to the HTML/CSS files, and started the service [kinda skipped the nitty gritty, but again, guide incoming].
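Once the DNS records are changed, a quick way to confirm the domain actually resolves to the VPS is a lookup like the one below. This is a minimal standard-library Python sketch: it only checks IPv4 A records, the domain and IP in the usage line are placeholders, and new records can take a while to propagate, so an early False isn't necessarily a mistake. The resolve parameter is only there so the lookup can be swapped out.

```python
import socket


def points_to(hostname, expected_ip, resolve=socket.gethostbyname_ex):
    """Return True if hostname currently resolves to expected_ip (IPv4 A records only)."""
    try:
        _canonical, _aliases, addresses = resolve(hostname)
    except socket.gaierror:
        return False  # name doesn't resolve yet (typo, or records still propagating)
    return expected_ip in addresses


# Hypothetical domain and VPS address; substitute your own.
# print(points_to("example.com", "203.0.113.10"))
```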

I was able to visit the site at this point from a client device but still had to set up SSL [Secure Sockets Layer] so that the connection was encrypted. Apparently it used to be pretty uncommon for personal websites to have encrypted connections, but that changed with the help of the EFF and Let's Encrypt, which offers free, open certificates [pretty cool of them honestly]. To make things even easier, the EFF also provides Certbot, a Python tool that automates obtaining one of these SSL certificates. After I ran it I got the cool green lock icon in the address bar and was feeling pretty cool myself! :)
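Let's Encrypt certificates are short-lived [90 days], so it's handy to be able to check when one expires. This is a minimal standard-library Python sketch, not part of Certbot; the function names are made up, and fetch_not_after needs network access to the site.

```python
import socket
import ssl
import time


def fetch_not_after(hostname, port=443, timeout=5.0):
    """Connect over TLS (with normal certificate verification) and return the cert's notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


def days_left(not_after, now=None):
    """Days until a certificate expires, given its notAfter string (e.g. 'Jan  5 09:34:43 2018 GMT')."""
    expires = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires - now) / 86400  # seconds per day


# Hypothetical domain; requires network access.
# print(f"{days_left(fetch_not_after('example.com')):.0f} days left")
```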

Those were the major updates for this week. Next week I'll be looking into hosting a git server for my code [including anything I create for this website, i.e. HTML/CSS/scripting stuff] and a git visualiser. Hosting a git server seems easy enough, but finding the right visualiser might need a bit more research. Right now the most promising candidate is stagit, which is a static git page generator. Hope that works out.

The stretch goal for this week is possibly hosting videos with [maybe?] a PeerTube instance. Not sure how much of a pain it'll be, or whether the bandwidth limits will throttle the experience too much, but it sounds useful to post even short videos demonstrating processes explicitly [if a picture is worth 1000 words, then what does that make a video?].

That's the update for the week.

Next TODOS! [Can be updated throughout the week]

Bottom of the Stack

2022-06-02 - Web Development - html/css

I'll start things off with the design goals of this very website and what I have done so far to get things going.

Unless things have changed drastically by the time you read this [which I doubt], it should be a fairly vanilla 1995 World Wide Web experience.

There are a couple reasons I chose to go this route.

Aside from the glaring hurdle that is my complete lack of any formal or informal web development experience, I like keeping the UNIX philosophy in mind when working on my projects [although manipulated to mean exactly what I want it to mean at any given time].

For those unfamiliar, the basic principle is to design programs to do one thing and one thing well.

I want this website to be a place where I throw my ideas into the void and can reference them later [and maybe share some of that knowledge to the few that find themselves here].

Although fun, interactive, dynamic features would be cool to look at, I think they would ultimately restrict my ability to meet this goal effectively.

That isn't to say that I am completely against adding any scripting for the sake of learning or experimenting [this IS a hobby website after all], but I do want to be able to quickly document my progress without too much thought on aesthetics [aside from some font colors].

But let me get down to brass tacks: what have I done so far?

Well if I am being completely honest, we aren't even online yet.

I bought a domain name from dynadot and have a Vultr VPS [hosting some other things at the moment], but haven't set anything else up.

I'll look up some guides tomorrow [famous last words] to see how to get us up and running.

So that's what I haven't done.

What I have done is started getting comfortable with HTML/CSS.

I have written in other markup languages like Markdown and LaTeX, so it wasn't too painful to pick up, but getting used to syntax is something you can't really rush [maybe you can, but I can't].

So, a few of the things I've learned so far:

- Basic HTML structure, i.e. defining a page with "<!DOCTYPE html>" as well as the <html>, <head>, and <body> tags.

- Other basic HTML tags, e.g. <p>, <strong>, <em>, etc.

- How to link to a style sheet [a cascading one to be exact] with

<link rel="stylesheet" type="text/css" href="$(LOCATION OF THE STYLE SHEET)" />

- Giving specific elements an id, e.g. id="name_of_tag"

- Basic CSS syntax

- Referencing specific elements by id in CSS with #$(ID_NAME)
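Putting the pieces above together, here's a small Python sketch that builds a bare-bones page: the doctype, the html/head/body tags, a stylesheet link, and an element with an id, plus the CSS rule that targets that id. The function name, the style.css file name, and the "intro" id are placeholders; html.escape keeps any text from being interpreted as markup.

```python
from html import escape


def minimal_page(title, stylesheet, element_id, text):
    """Build a bare-bones HTML page that links an external stylesheet."""
    return f"""<!DOCTYPE html>
<html>
<head>
  <title>{escape(title)}</title>
  <link rel="stylesheet" type="text/css" href="{escape(stylesheet)}" />
</head>
<body>
  <p id="{escape(element_id)}">{escape(text)}</p>
</body>
</html>
"""


# The matching rule in style.css selects the element by its id with "#".
css_rule = "#intro { color: green; }"

print(minimal_page("Bottom of the Stack", "style.css", "intro", "Hello, void."))
```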

Next TODOS! [Can be updated throughout the week]